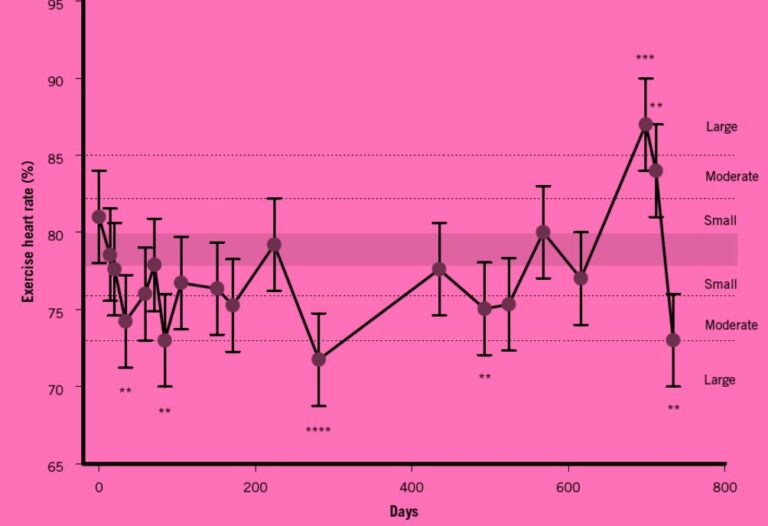

Factors associated with match outcomes in elite European football – insights from machine learning models

Settembre M, Buchheit M, Hader K, Hamill R, Tarascon A, Verheijen R, McHugh D. Factors associated with match outcomes in elite European football – insights from machine learning models....